Years ago, building 113 used to be the Livermore laboratory’s headquarters; the director ruled his domain from its fifth floor. There is a large conference room in B113, and on its front door there is a sign that proudly proclaims the conference room to be the von Neumann Room. I once had an office in that building, and I often wondered why the Laboratory would have a conference room dedicated to John von Neumann. After doing research for this history, I know why.

When I arrived as a physicist at the Laboratory, I was assigned a group leader, much as one is assigned a squad leader when one joins the Army. Like a squad leader, my group leader squared me away, showed me the ropes, and pointed me in the right direction to start producing some research. My group leader was a man named George Maenchen, one of the most outstanding physicists I’ve ever had the privilege to work with in my career. He had a rich Austrian accent, he looked a bit like Santa Claus, he smoked a pipe, and he had tobacco stains running down his shirt beneath his Laboratory badge, which was held together with Scotch tape. I’ll talk more about George in a later blog, and I hope to God he doesn’t read this or he’ll be banging on my front door during this mandatory stay-at-home.

George lectured me right away, “Tom, we are not like Thomas Edison. We don’t test a thousand light bulbs to figure out what is the best filament. Here we model everything first on a computer, then we do a test to confirm what we already know from our modeling.” Upon reflection, I noticed after a while that at Livermore there was what I call a distinct “computer culture” among the Laboratory’s scientists and engineers. That is a legacy of John von Neumann.

Before we dive into today’s blog, a word about the Teller-Ulam paper of March 1951. I read through that document at least five times to make sure I understood what they were saying. I believe what I tell you in this blog, and in my upcoming book, is a correct interpretation of that paper, and I’ve confirmed that with some eminent physicists here in Livermore – including two who worked with Teller over the years. My apologies to anyone who doesn’t like to read about papers written by a physicist and a mathematician. I’m making a point of this because that paper, and how it is interpreted, are very controversial in the nuclear design world I’ve been living in for the past few decades.

Please enjoy reading these highlights from my upcoming book, From Berkeley to Berlin:

When Lawrence took up the challenge of helping the country build a thermonuclear program, it was quickly apparent the problem would be much more complex than anything he had faced when he helped start an atomic program during a world war. It was one thing to configure a heap of fissionable fuel into a critical mass; it is quite another to figure out how to heat thermonuclear fuel to a temperature like that in the center of the Sun, and then hold that temperature long enough for nuclei to release fusion energy. That kind of challenge meant the ability to make and use mathematical models was an absolutely necessary condition for designing a thermonuclear weapon. Thinking back to the mathematical tools available in post-war America, how on earth could one do that?

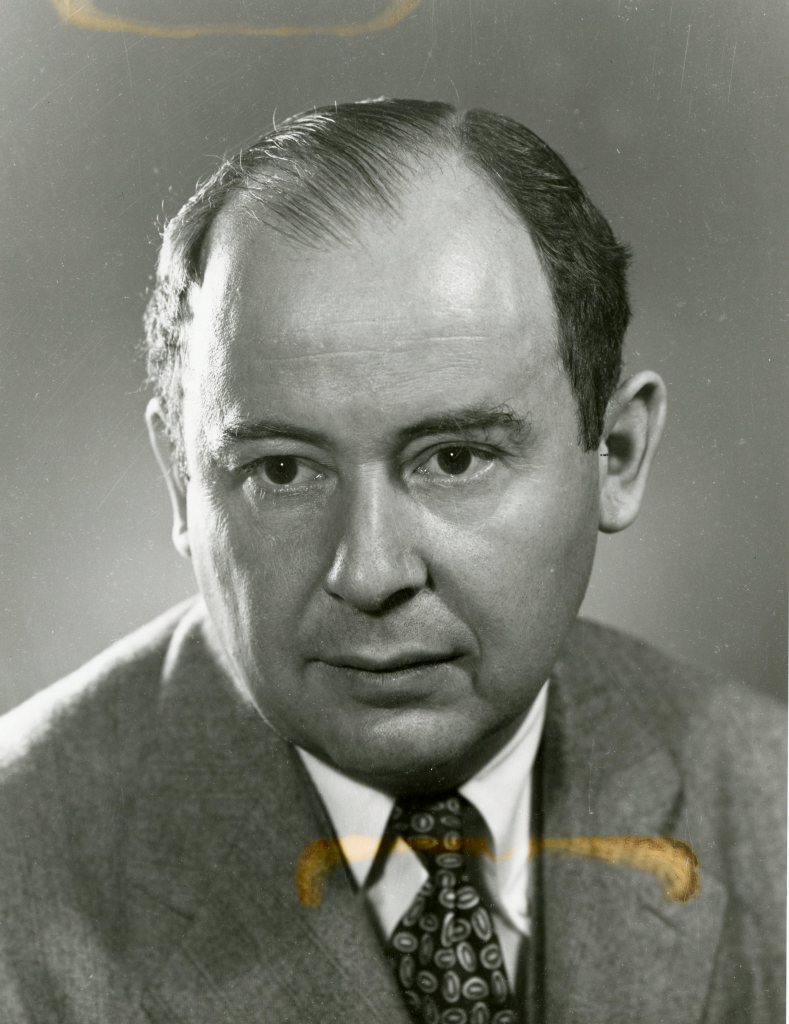

Fortunately for Americans, we were home to the world’s greatest mathematician of the twentieth century: a Hungarian-born Jew, a Catholic convert, a man who hated Nazis and Communists, a patriot. One of the most influential intellectuals to usher in the thermonuclear age was John von Neumann, who had a passion for mathematics; he published his first mathematics paper at the age of 17, while he was still in high school. In 1928, he wrote the textbook Mathematical Foundations of Quantum Mechanics, which is still in print. The next year, he and fellow Hungarian Eugene Wigner received invitations to join the faculty of the Institute for Advanced Study in Princeton, and in 1930, they joined the company of Albert Einstein in one of America’s most influential institutions.

Von Neumann had a brief experience living in a totalitarian state after World War I, when Communists under Béla Kun took over Hungary, and the experience made him an ardent anti-Communist. In 1937 he became an American citizen and tried to enlist in the Army but was turned down because of his age. Instead, the Army hired him as a consultant, and his involvement with the military continued for the rest of his life. In 1938, he returned to Europe and married Klári Dán, a talented athlete who had won the national figure skating championship of Hungary when she was fourteen. John and Klári left Europe after their wedding with “an unforgiving hatred for the Nazis, a growing distrust of the Russians, and a determination never again to let the free world fall into a position of military weakness that would force the compromises that had been made with Hitler while the German war machine was gaining strength.”

Von Neumann’s role in helping to usher in the thermonuclear age grew out of his fascination with using machines to do mathematical calculations. In the spring of 1936, Alan Turing, a 24-year-old British mathematician, wrote an article for the Proceedings of the London Mathematical Society titled “On Computable Numbers, With an Application to the Entscheidungsproblem.”* In the first section of his paper, titled “Computing Machines,” Turing laid out the principles needed for a machine to do mathematical calculations. That paper helped launch computer science.

Von Neumann read the article and invited Turing to join him in Princeton. Turing left England from Southampton in September 1936 on the liner Berengaria, after buying a sextant from a London merchant, presumably to learn navigation at sea. He arrived at Princeton and von Neumann helped enroll him as a doctoral student in mathematics. A machine that embodied the principles of Turing’s 1936 article came to be called a ‘Universal Turing Machine,’ a label that applies to practically all modern digital computers.

Turing turned down an offer to stay as an assistant to von Neumann and instead returned to England, where he devoted himself to the field of cryptology. In World War II he was a central figure in the successful British effort to crack the German military’s Enigma Code, a service to the British that was portrayed in the popular Hollywood movie The Imitation Game. Turing and von Neumann left lasting impressions on each other, and their collaboration in the years 1937 and 1938 led both to devote a substantial portion of the rest of their lives to developing computer science.

Sometime in August or September 1944, von Neumann witnessed a demonstration at the Aberdeen Proving Grounds of the Army’s Electronic Numerical Integrator and Computer, or ENIAC—a product of John W. Mauchly and J. Presper Eckert. Mauchly was an electronics instructor at the Moore School of Electrical Engineering of the University of Pennsylvania, in Philadelphia. There he met Eckert, a native Philadelphian whose first job while in high school had been at the television research laboratory of Philo Farnsworth, a job that left him with an excellent understanding of electronics.

The ENIAC could add 5,000 numbers or do 357 ten-digit multiplications in one second; however, it was not easy to set up. It was programmed by setting a bank of ten-position switches and connecting thousands of cables by hand, a process that could take hours to days. Yet, as von Neumann watched Mauchly and Eckert demonstrate their machine, he could see how valuable it could be to the Super project.

For all its sophistication, the ENIAC did not have all the attributes of a Universal Turing Machine; von Neumann hoped to fix that by building his own computer at the Institute for Advanced Study. The result was a machine that could do fast, electronic, and completely automatic all-purpose computing. He called it the Mathematical and Numerical Integrator and Computer, or more familiarly, the MANIAC. The computer stood six feet high, two feet wide, and eight feet long, and weighed 1,000 pounds. Von Neumann hoped to see his computer used to help design a Super, but how does one configure a computer program to do that? The answer to that would come from a member of Teller’s group at Los Alamos.

* * *

Stan Ulam, that illustrious member of the Director’s Committee, had an inspiration. While recovering from a bout of encephalitis in 1946, his doctor told him to lie still and not think. To the Polish mathematician that sounded like asking him not to breathe. He passed his time playing solitaire, and despite his doctor’s warning, he began to calculate the probability of winning a game. To do that he needed statistics based on random distributions of cards in the deck.

The number of card combinations was too great to deal adequately with a mathematical formula; Ulam came to realize it would be easier to get statistics by playing the game repeatedly with random starting cards and recording the results. He applied those thoughts to the Super. What if instead of keeping track of randomly distributed playing cards, he kept track of how random neutrons behaved inside the Super?

Ulam imagined he could randomly select a neutron, and by applying appropriate probable outcomes, he could track it to its fate. If he repeated the process for thousands of neutrons with randomly selected starting values, he could build up a statistical basis on which to determine the outcome for a flow of neutrons in a real problem. One member of Teller’s group, Nick Metropolis, gave a name to Ulam’s methodology: he called it a Monte Carlo calculation because it resembled a game of chance.
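
For readers who like to see the idea in code, here is a minimal sketch of that procedure. It is a toy model under loudly stated assumptions, not a reconstruction of what Ulam or Metropolis actually computed: a one-dimensional slab, a single made-up mean free path, a fixed absorption probability at each collision, and isotropic scattering. The names `track_neutron` and `simulate` and all the numerical values are hypothetical, chosen only for illustration.

```python
import random


def track_neutron(rng, thickness, mean_free_path, absorb_prob):
    """Follow one neutron to its fate: 'transmitted', 'reflected', or 'absorbed'."""
    x = 0.0    # position, measured from the face where the neutron enters
    mu = 1.0   # direction cosine; the neutron starts out heading straight in
    while True:
        # Distance to the next collision is drawn from an exponential
        # distribution whose mean is the (assumed) mean free path.
        x += mu * rng.expovariate(1.0 / mean_free_path)
        if x >= thickness:
            return "transmitted"
        if x < 0.0:
            return "reflected"
        # A collision happened inside the slab: roll for absorption.
        if rng.random() < absorb_prob:
            return "absorbed"
        # Otherwise scatter isotropically by picking a new direction cosine.
        mu = rng.uniform(-1.0, 1.0)


def simulate(n_neutrons, thickness=2.0, mean_free_path=1.0,
             absorb_prob=0.3, seed=1):
    """Track many neutrons and return the fraction that met each fate."""
    rng = random.Random(seed)  # seeded so a run can be repeated exactly
    counts = {"transmitted": 0, "reflected": 0, "absorbed": 0}
    for _ in range(n_neutrons):
        fate = track_neutron(rng, thickness, mean_free_path, absorb_prob)
        counts[fate] += 1
    return {fate: n / n_neutrons for fate, n in counts.items()}


if __name__ == "__main__":
    print(simulate(10_000))
```

Each call to `track_neutron` plays one "hand" of the game; `simulate` plays thousands of hands and tallies the outcomes. The more neutrons tracked, the less the tallied fractions wander from run to run, which is why the method was so hungry for arithmetic.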

Applying a Monte Carlo method to a fluence of neutrons traveling through the Super required millions of calculations, so it seemed Ulam’s idea was impractical, except the digital computer had been invented. Doing Monte Carlo simulations became a major driver for involving the digital computer in thermonuclear research. A duplicate of von Neumann’s MANIAC was constructed at Los Alamos to do thermonuclear calculations using, among other things, the Monte Carlo methodology.

In January 1950, after Teller and Gamow had published their design paper for the Super, Ulam decided to use his Monte Carlo method to see if the Teller/Gamow scheme would work. Amidst the confusion of getting a computer to Los Alamos, Ulam recruited a mathematician he had met at the University of Wisconsin, Cornelius J. Everett, to assist him with the calculations. Since they did not yet have full access to a computer, these calculations followed a mathematical schema worked out by Ulam in which intelligent estimates were used to calculate nuclear reactions. The work was painstakingly slow and laborious, and Ulam had to bring in an army of human computers, including his wife Francoise, to do much of the arithmetic.

By early March 1950, Ulam published a report. In the introduction, he said it was the product of hand calculations; using a computer to make Monte Carlo calculations would have to follow at a later date, as he explained, “One very unorthodox feature of our calculation is the use of ‘guesses’ or estimates of values of multi-dimensional integrals.” Several paragraphs later, he dropped his bombshell: “The result of the calculations seems to be that the model considered is a fizzle.” In other words, after months of mind-numbing calculations, he concluded ions in the Super did not retain energy long enough to ignite fusion.

When Teller read the report, he was livid. For years, he had been extolling his design of the Super, and now Ulam was telling him it was flawed. Ulam wrote to von Neumann, “He was pale with fury yesterday literally—but I think he is calmed down today.” In a classic example of shooting the messenger, Teller persuaded Bradbury to disband the Director’s Committee and Gamow was not pleased.

That summer, Fermi arrived to go over the calculations with Ulam. To be sure he understood Ulam’s approach to the problem, Fermi asked Ulam to review his methodology one more time and Ulam explained his assumptions for setting up the problem. Fermi and Ulam cloistered themselves and redid the calculations, this time looking at how the Super would propagate thermonuclear reactions once it was ignited. They repeated the earlier time-step calculations in a sort of competitive environment, with Ulam and Everett working as one team, and Fermi working in parallel, assisted by Miriam Planck, a LASL employee who carried out many of the calculations on a sophisticated adding machine. By the end of the summer, Fermi was satisfied that Ulam’s earlier conclusions had been correct.

Von Neumann decided to check Ulam’s results himself. He duplicated Ulam’s schema on the MANIAC in Princeton, doing finer calculations, including Monte Carlo simulations. A key programmer for the Monte Carlo calculations was von Neumann’s wife, Klári. She had worked with the Census Bureau during the war and had gained an appreciation for building algorithms for statistics. Slowly, as the months of May and June went by, the results began to come in. The computer calculations confirmed Ulam’s original finding.

Ulam’s idea was similar to Teller’s original concept for the Super, but with a few important distinctions. Ulam suggested using one atomic bomb, which he called a primary, to compress another atomic bomb, called a secondary, placed adjacent to it. Instead of using an atomic bomb to initiate fusion reactions, as Teller had suggested, Ulam wanted to use an atomic bomb to compress another fission device.

His point was that imploding an atomic bomb with high explosives was shown to be a means to achieve an atomic explosion, but the amount of compression garnered from high explosives was limited. The much greater energy from an atomic blast could conceivably compress a secondary much more, which meant more nuclear fission. This was not the classic Super as Teller had envisioned it, but a means to achieve more yield from an atomic device.

Teller’s conversation with Ulam made him rethink his earlier ideas about compressing the deuterium in the Super; he thought compression did not matter since the increase in the rate of nuclear reactions through compression was offset by the increased rate radiation escaped from the Super. It wasn’t only Teller who held to this idea: Fermi, Bethe, von Neumann, and Gamow had not suggested compressing the fuel.[xii] Perhaps Ulam’s depressing news that the Super didn’t work made Teller reconsider the physics behind the Super’s design, and it opened his mind to alternative ideas.

Teller had considered compression to be a linear effect; there was a one-for-one tradeoff between reaction rates and escaping photons. According to Fermi’s calculations during the Manhattan Project that is true to first order, but there are subtle second and third order effects favoring fuel compression. For instance, a compressed fuel offered a smaller volume to be heated, making energy losses more manageable. Once Teller understood Ulam’s concept, he transformed it to an idea of using the radiation from the atomic primary to surround, not another atomic bomb, but a thermonuclear secondary, and thereby compress it. The physicist and the mathematician eagerly explored ideas and Teller and Ulam agreed to jointly write their collective thoughts into a paper. It was March 1951.

Their article was 20 typewritten pages long, double-spaced, with four figures. In their introduction they laid out their message: “By an explosion of one or several conventional auxiliary fission bombs, one hopes to establish conditions for the explosion of a ‘principal’ bomb …a thermonuclear assembly.” They offered two alternative approaches to applying the new idea, and over the decades to come, both approaches were found to be useful.

In the years following, a controversy arose about who had invented the hydrogen bomb—was it Teller or Ulam? Teller later claimed it was the work of many people, but certainly the two main collaborators were Teller and Ulam. They needed each other. Their joint paper was remarkable in that, having been written by a mathematician and a theoretical physicist, it had surprisingly few mathematical equations. Their article, written in clear and distinct language, became a landmark publication. In one stroke, it pushed aside an obstacle stalling the Super almost to failure.

While Teller and Ulam established the concept of a workable Super, Teller’s assistant, Frédéric de Hoffman, solidified the idea into a reasonable design. One month after the Teller-Ulam article was published, de Hoffman wrote a document that laid out the characteristics of a new Super. He called the device a “Sausage.” He insisted on using Teller’s name as the sole author, saying that all the ideas in this second paper owed their origin to Teller.

The exact materials, dimensions, shape, and densities that made up an actual device still had to be determined. The year before, Fermi had sent Teller one of his graduate students, Richard Garwin, to help during the summer months. A greater level of design emerged three months after the Teller-Ulam article when Garwin wrote a paper that gave more precise physical characteristics for a warhead. Teller claimed that it was Garwin who became the essential link between the design physicists and the engineers who were responsible for manufacturing a thermonuclear device. Wheeler’s assistant, Ken Ford, thought Garwin’s paper “set forth an actual workable design of a thermonuclear weapon.”

Tom,

I’m not sure that Monte Carlo played that big a role in the neutronics calculations on the ENIAC that von Neumann did on his Super calculations. I’m thinking it was diffusion neutronics.

I should tell you sometime of the connection of vonNeuman’s MANIAC at IAS and Ridge Vnyds. Very interesting story. You can call me (505-660-8712).

Have you seen Nick Lewis’s PhD thesis last Fall at Univ of Wisconsin on early history of computing at LASL? You must read it. If not, I can get you a copy.

Tom, I’m not sure if this comment got to you, so I’m repeating it. Thank you for sharing your time with me, I appreciate it.

I’ve had a night to think about your comment Tom. Certainly, I think physicists would want to use Fick’s Law to predict the dispersion of neutrons within the Super. I emphasized Monte Carlo calculations in my story because Ulam himself emphasized their importance in his autobiography, and in an interview he gave to a Los Alamos public affairs officer. I even quoted him saying he literally threw dice while doing calculations with Everett.

I think I remember reading in George Dyson’s book Turing’s Cathedral that Nick Metropolis went to IAS and did MANIAC computer calculations for the Super while working with von Neumann’s wife Klari. Again, what I got from that was that they were using Monte Carlo algorithms.

With all this being said, Tom, I would be very interested in learning about alternative algorithms being used that you are aware of. Of course, I may have been prejudiced in my treatment of Monte Carlo because it’s such a powerful way to build statistics and come up with accurate calculations. Think how George Maenchen used Monte Carlo calculations in the 1970s to design the Super.

Tom,

The reason I’m skeptical of use of MC on the ENIAC is I’m not sure they had random number generators on-line at that point in time. MC neutronics in that era used, I believe, tables of random numbers. That’s what they used for MC calculations then using Fermi’s Trolley. I’m checking with Art Forster on this. Will get back to you.

For neutron transport, I think much of it back in the early ’50’s was diffusion theory, which was found to be surprisingly good. They did have Bengt Carlson’s discrete ordinates/Sn method, but I think it was too expensive to use on ENIAC/MANIAC. And MC, with any kind of decent statistics, even more expensive.

Tom,

Talked to Art Forster. He thought that in the early ’50’s, they might have been using some form of MC on calculations for Teller’s Super on MANIAC. But he wasn’t sure where they were getting their random numbers.

He relayed that the best source of such information would be the book of several yrs ago “ENIAC In Action”. Have you checked in that book? He said there were several chapters on Super calculations.

Thanks Tom. Frankly, I’m not going to go too deeply into the specific algorithms used to do Super calculations – the editors would squash that. I had to tie in the invention of digital computers with the origins of a thermonuclear age, and the story of how Monte Carlo got started seemed to me to be a good way to do that. It tied von Neumann and Ulam together in one handsome knot.

By the way, I had to create a random number generator as a homework assignment once, and I simply used a mathematical schema that started by noting the time on a digital clock that was accessible to the computer. It was an imperfect generator, but it worked fairly well. Perhaps Ulam did something similar – imperfect but simple and good enough. After all, he needed to get an answer quickly.

That makes sense to me not to go too deeply into the algorithms. As a physicist, that’s what interests me. But for a broader audience you’re aiming for, not so much.

Tom, this was an exceptional article. It was the first time that I read an account of the Ulam-Teller collaboration that made sense. Only someone who was familiar with the actual facts could have gotten this right. Also, I had never taken the time to understand Richard Garwin’s role. During the anti-nuclear protests, he was an ever present protester who received much praise for his “credibility.” Your article puts his contribution in perspective.

Thanks Harry. I admit, it took time to figure it out. Tom
